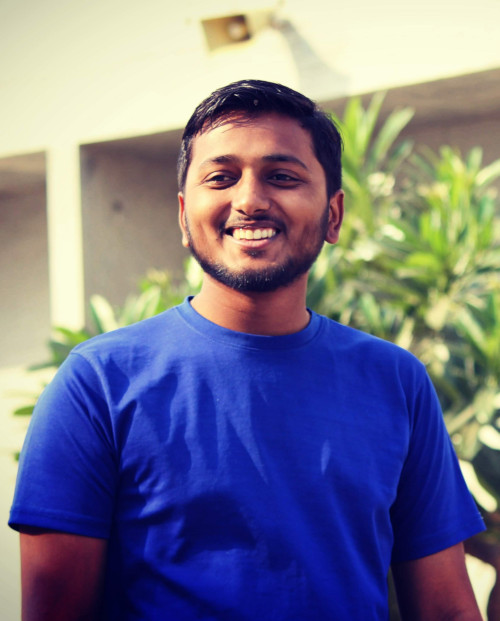

Hi, I’m

Jayesh Manani

A Software Engineer (currently studying for an M.Sc. in Cyber Security at Brandenburgische Technische Universität Cottbus-Senftenberg)

I build software that makes day-to-day life easier, giving people and organizations simple, reliable solutions they can use efficiently to get their work done. I love analysing data, I'm good at maths and statistics, and I use machine learning algorithms to build awesome solutions. I use Python and related frameworks to get things done.

Let's Talk